Featured Research

The ultimate goal of our research is to enable trustworthy, interactive, and human-centered autonomous embodied agents that can perceive, understand, and reason about the physical world; safely interact and collaborate with humans; and efficiently coordinate with other intelligent agents so that they can benefit society in daily life. To accomplish this goal, our team has been pursuing interdisciplinary research that develops fundamental theories and practical algorithms grounded in robotics, machine learning, reinforcement learning, computer vision, control theory, and optimization, which are validated on various robotic hardware platforms such as humanoid mobile manipulators, quadrupeds, autonomous vehicles, manipulators, and drones.

Although autonomous navigation in simple, static environments has been well studied, it remains challenging for robots to navigate

in highly dynamic, interactive scenarios (e.g., intersections, narrow corridors) where humans are involved.

Robots must learn a safe and efficient behavior policy that can model the interactions, take into account the uncertainties among

the interactions during decision making, coordinate with surrounding static and dynamic entities, and generalize to

out-of-distribution (OOD) situations.

In our research, we have

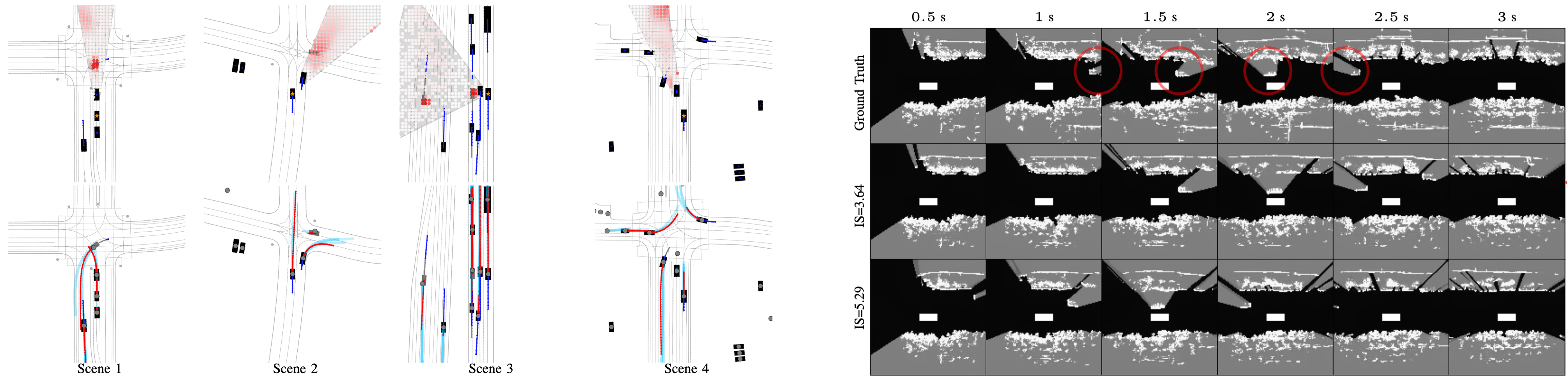

1) introduced a novel interaction-aware decision making framework for autonomous vehicles based on deep reinforcement learning (DRL),

which integrates human internal state inference, domain knowledge, trajectory prediction, and counterfactual reasoning systematically;

2) developed a novel guided meta RL paradigm to improve the generalizability of learned policies and an importance sampling based

training mechansim for unbiased policy learning;

3) investigated DRL methods that leverage the learned pairwise and group-wise relations for social robot navigation around human crowds;

and 4) proposed the first DRL framework that integrates the prediction uncertainty of pedestrians obtained from adaptative conformal

inference and explicitly guides the policy learning process in a principled manner for social navigation.

These approaches achieve superior performance in the corresponding tasks and provide explainable, human-understandable intermediate

representations to build trust with humans.
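As a rough illustration of how adaptive conformal inference can turn a stream of pedestrian prediction errors into an uncertainty radius, the sketch below applies the standard online update α_{t+1} = α_t + γ(α − err_t), where err_t is 1 when the observed error falls outside the current radius. It is a minimal example and not the framework described above: the function names, the scalar distance error, and the parameters `target_alpha`, `gamma`, and `window` are all assumptions made for illustration.

```python
import math


def conformal_quantile(scores, alpha):
    """Conservative empirical (1 - alpha) quantile of past nonconformity scores."""
    if alpha >= 1.0:
        return 0.0       # no coverage requested: smallest possible radius
    if alpha <= 0.0 or not scores:
        return math.inf  # full coverage requested, or no calibration data yet
    ordered = sorted(scores)
    k = math.ceil((1.0 - alpha) * (len(ordered) + 1))
    return ordered[k - 1] if k <= len(ordered) else math.inf


def adaptive_conformal_radii(errors, target_alpha=0.1, gamma=0.05, window=50):
    """Return one uncertainty radius per step for a stream of prediction errors.

    errors[t] is assumed to be the distance between the predicted and the
    observed position at step t (hypothetical input format).
    """
    alpha_t = target_alpha
    history, radii = [], []
    for err in errors:
        # Radius is computed from past errors only, before observing the new one.
        radius = conformal_quantile(history[-window:], alpha_t)
        radii.append(radius)
        miss = 1.0 if err > radius else 0.0
        # Misses lower alpha_t (wider radius next time); hits raise it.
        alpha_t += gamma * (target_alpha - miss)
        history.append(err)
    return radii
```

In a navigation setting, a radius like this could be attached to each predicted pedestrian position and passed to the policy as an additional observation; how the uncertainty actually guides policy learning in the published method is described in the paper, not here.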

Related Publications:

11. Towards Generalizable Safety in Crowd Navigation via Conformal Uncertainty Handling, Conference on Robot Learning (CoRL 2025).

10. Multi-Agent Dynamic Relational Reasoning for Social Robot Navigation, submitted to IEEE Transactions on Robotics (T-RO), under review.

9. Human Implicit Preference-Based Policy Fine-tuning for Multi-Agent Reinforcement Learning in USV Swarm, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025).

8. Importance Sampling-Guided Meta-Training for Intelligent Agents in Highly Interactive Environments, IEEE Robotics and Automation Letters (RA-L), 2025.

7. Interactive Autonomous Navigation with Internal State Inference and Interactivity Estimation, IEEE Transactions on Robotics (T-RO), 2024.

6. Robust Driving Policy Learning with Guided Meta Reinforcement Learning, ITSC 2023.

5. Game Theory-Based Simultaneous Prediction and Planning for Autonomous Vehicle Navigation in Crowded Environments, ITSC 2023.

4. Autonomous Driving Strategies at Intersections: Scenarios, State-of-the-Art, and Future Outlooks, ITSC 2021.

3. Reinforcement Learning for Autonomous Driving with Latent State Inference and Spatial-Temporal Relationships, ICRA 2021.

2. Orientation-Aware Planning for Parallel Task Execution of Omni-Directional Mobile Robot, IROS 2021.

1. Safe and Feasible Motion Generation for Autonomous Driving via Constrained Policy Net, IECON 2017.

While large language models (LLMs) and vision-language models (VLMs) have demonstrated remarkable proficiency in comprehending natural language and translating human instructions into detailed plans for straightforward robotic tasks, they encounter significant difficulties when tackling long-horizon complex tasks.

In particular, the challenges of sub-task identification and allocation become especially complicated in scenarios involving cooperative teams of heterogeneous robots.

To address these challenges, we have proposed a novel multi-agent task planning framework designed for long-horizon tasks. It integrates the reasoning capabilities of LLMs with traditional heuristic search planning, achieving high success rates and efficiency while demonstrating robust generalization across various tasks.

This research not only contributes to the advancement of task planning for heterogeneous robotic teams but also lays the groundwork for future explorations in multi-agent collaboration.

Complementary to this multi-agent planning line, our work on Retrieval-based Demonstration Decomposer (RDD) tackles the core problem of long-horizon manipulation for a single embodied agent: how to decompose complex demonstrations into executable sub-tasks that align with a robot’s existing visuomotor skills.

Instead of relying on hand-crafted rules or generic unsupervised methods, RDD leverages a small set of human-annotated sub-tasks as references and retrieves visually similar intervals to decompose new demonstrations. This retrieval-based sub-task segmentation significantly improves the performance of hierarchical vision-language-action (VLA) policies on RLBench and real-world manipulation tasks. Crucially, it is not limited to hierarchical VLAs: the same mechanism can serve as a general-purpose sub-task annotation tool for a wide range of long-horizon manipulation and sequential decision-making problems.

Related Publications:

9. RDD: Retrieval-Based Demonstration Decomposer for Planner Alignment in Long-Horizon Tasks, 39th Conference on Neural Information Processing Systems (NeurIPS 2025).

8. LaMMA-P: Generalizable Multi-Agent Long-Horizon Task Allocation and Planning with LM-Driven PDDL Planner, International Conference on Robotics and Automation (ICRA 2025).

7. HEAL: An Empirical Study on Hallucinations in Embodied Agents Driven by Large Language Models, Conference on Empirical Methods in Natural Language Processing (EMNLP 2025 Findings).

6. TrajEvo: Trajectory Prediction Heuristics Design via LLM-driven Evolution, AAAI Conference on Artificial Intelligence (AAAI 2026).

5. Conformal Prediction and MLLM aided Uncertainty Quantification in Scene Graph Generation, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2025).

4. Generative AI for Autonomous Driving: Frontiers and Opportunities, under review.

3. Can Large Vision Language Models Read Maps Like a Human?, under review.

2. Preference VLM: Leveraging VLMs for Scalable Preference-Based Reinforcement Learning, under review.

1. VLM-3R: Vision-Language Models Augmented with Instruction-Aligned 3D Reconstruction, under review.

Mobile robots and autonomous vehicles rely heavily on onboard sensors for perceiving and understanding the surroundings, and thereby learning safe and efficient planning strategies. While deep learning-based perception methods demonstrate impressive abilities in various tasks, including 2D/3D object detection, occupancy prediction, segmentation, and tracking, this reliance on onboard sensing is vulnerable to occlusions, impaired visibility, and limited perception range.

With the advancement of multi-agent communication, connected and automated vehicles (CAVs) can overcome the inherent limitations of a single autonomous vehicle, and mobile robots can collaboratively navigate complex environments.

Our research is the first to demonstrate enhanced situational awareness by sharing information between CAVs in both the perception and motion prediction modules.

Our framework is designed to tolerate realistic V2X bandwidth limitations and transmission delays. Through extensive experiments and ablation studies on both simulated and real-world V2V datasets, we demonstrate the effectiveness of our method in cooperative perception, tracking, and motion prediction.

This work advances multi-agent cooperation in robotics and autonomous driving systems.

Related Publications:

4. CMP: Cooperative Motion Prediction with Multi-Agent Communication, IEEE Robotics and Automation Letters (RA-L), 2025.

3. STAMP: Scalable Task And Model-agnostic Collaborative Perception, International Conference on Learning Representations (ICLR 2025).

2. CoMamba: Real-time Cooperative Perception Unlocked with State Space Models, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025).

1. LaMMA-P: Generalizable Multi-Agent Long-Horizon Task Allocation and Planning with LM-Driven PDDL Planner, International Conference on Robotics and Automation (ICRA 2025).

We investigate foundation models and vision-language models (VLMs) for robotics and autonomous systems to enhance their reasoning capability and reliability.

For example, inferring the short-term and long-term intentions of traffic participants and understanding the contextual semantics of scenes are the keys to scene understanding and situational awareness of autonomous vehicles.

Moreover, how to enable autonomous agents (e.g., self-driving cars) to explain their reasoning, prediction, and decision making processes to human users (e.g., drivers, passengers) in a human-understandable form (e.g., natural language), and thereby build human trust, remains largely underexplored.

Therefore, we created the first multimodal dataset for a new risk object ranking and natural language explanation task in urban scenarios and a rich dataset for intention prediction in autonomous driving, establishing benchmarks for corresponding tasks. Meanwhile, our research introduced novel methods that achieve superior performance on these problems.

Related Publications:

4. Rank2Tell: A Multimodal Dataset for Joint Driving Importance Ranking and Reasoning, WACV 2024.

3. DRAMA: Joint Risk Localization and Captioning in Driving, WACV 2023.

2. Important Object Identification with Semi-Supervised Learning for Autonomous Driving, ICRA 2022.

1. LOKI: Long Term and Key Intentions for Trajectory Prediction, ICCV 2021.

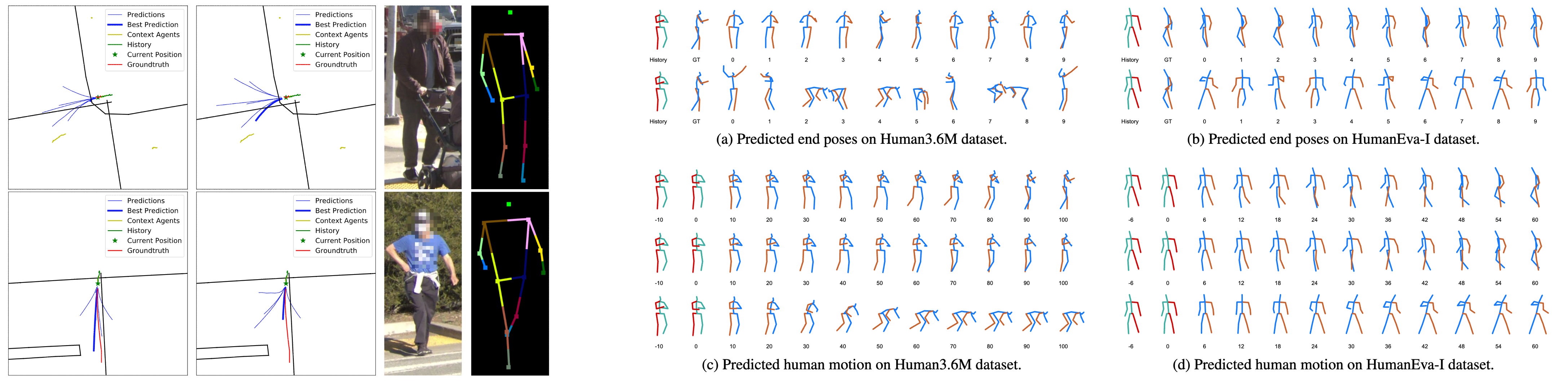

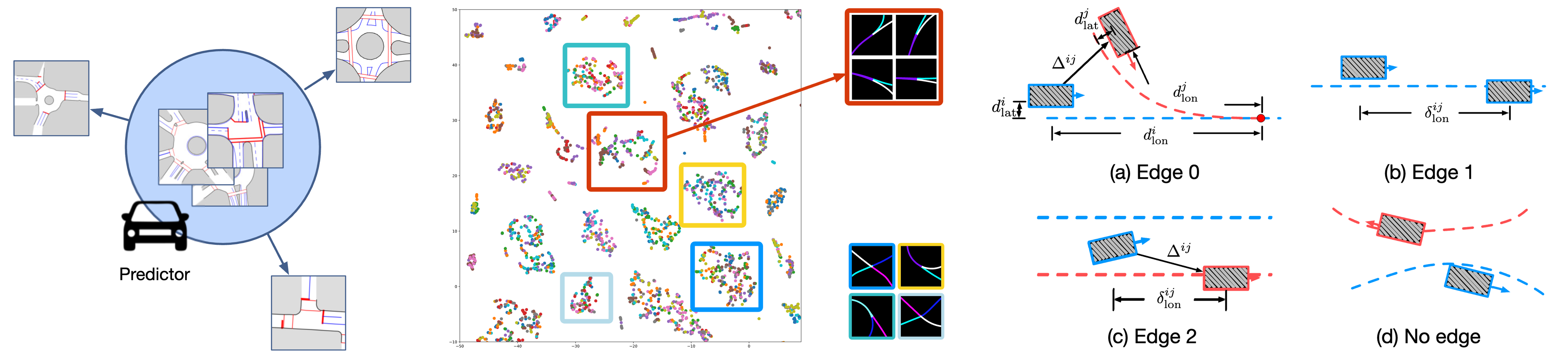

We investigate dynamic relational reasoning and interaction modeling in the context of the trajectory/motion prediction task, which aims to generate accurate, diverse future trajectory hypotheses or state sequences based on historical observations.

Our research introduced the first unified relational reasoning toolbox that systematically infers the underlying relations/interactions between entities at different scales (e.g., pairwise, group-wise) and different abstraction levels (e.g., multiplex) by learning dynamic latent interaction graphs and hypergraphs from observable states (e.g., positions) in an unsupervised manner.

The learned latent graphs are explainable and generalizable, significantly improving the performance of downstream tasks, including more accurate and generalizable prediction as well as safer and more efficient sequential decision making and control for mobile robots.

We also proposed a physics-guided relational learning approach for physical dynamics modeling, which accurately simulates and infers future evolution of physical systems.

Related Publications:

11. Multi-Agent Dynamic Relational Reasoning for Social Robot Navigation, submitted to IEEE Transactions on Robotics (T-RO), under review.

10. Interactive Autonomous Navigation with Internal State Inference and Interactivity Estimation, IEEE Transactions on Robotics (T-RO), 2024.

9. Grouptron: Dynamic Multi-Scale Graph Convolutional Networks for Group-Aware Crowd Trajectory Forecasting, ICRA 2022.

8. Important Object Identification with Semi-Supervised Learning for Autonomous Driving, ICRA 2022.

7. Learning Physical Dynamics with Subequivariant Graph Neural Networks, NeurIPS 2022.

6. Interaction Modeling with Multiplex Attention, NeurIPS 2022.

5. Spatio-Temporal Graph Dual-Attention Network for Multi-Agent Prediction and Tracking, IEEE Transactions on Intelligent Transportation Systems, 2022.

4. RAIN: Reinforced Hybrid Attention Inference Network for Motion Forecasting, ICCV 2021.

3. Continual Multi-agent Interaction Behavior Prediction with Conditional Generative Memory, IEEE Robotics and Automation Letters, 2021.

2. Spectral Temporal Graph Neural Network for Trajectory Prediction, ICRA 2021.

1. EvolveGraph: Multi-Agent Trajectory Prediction with Dynamic Relational Reasoning, NeurIPS 2020.

Trajectory and occupancy prediction is a critical research area in the field of autonomous driving. As autonomous driving technology advances rapidly, accurately predicting the trajectories and occupancy of dynamic objects such as vehicles and pedestrians has become essential for enhancing the safety and reliability of autonomous systems. Effective trajectory and occupancy prediction enables autonomous vehicles to anticipate potential hazards in their environment, thereby improving decision making processes and reducing the risk of accidents. This directly contributes to the development of more robust and safe autonomous driving technologies. In our research, we have

1) developed effective solutions to model the diverse and uncertain behavior of various traffic participants (e.g., vehicles, pedestrians, cyclists) and infer their future trajectories and occupancy of the scene in highly complex and interactive traffic scenarios;

2) investigated how to effectively detect and handle out-of-distribution (OOD) situations by improving the generalizability of prediction frameworks, which achieves state-of-the-art performance in cross-dataset OOD evaluations;

and 3) introduced the first-of-its-kind cooperative motion prediction framework that advances the capabilities of connected and automated vehicles (CAVs) in cooperative tracking and motion prediction, addressing the crucial need for safe and robust decision making in dynamic environments.

Related Publications:

24. Trends in Motion Prediction Toward Deployable and Generalizable Autonomy: A Revisit and Perspectives, under review.

23. UniOcc: A Unified Benchmark for Occupancy Forecasting and Prediction in Autonomous Driving, IEEE International Conference on Computer Vision (ICCV 2025).

22. CMP: Cooperative Motion Prediction with Multi-Agent Communication, IEEE Robotics and Automation Letters (RA-L), 2025.

21. Adaptive Prediction Ensemble: Improving Out-of-Distribution Generalization of Motion Forecasting, IEEE Robotics and Automation Letters (RA-L), 2025.

20. TrajEvo: Trajectory Prediction Heuristics Design via LLM-driven Evolution, AAAI Conference on Artificial Intelligence (AAAI 2026).

19. Self-Supervised Multi-Future Occupancy Forecasting for Autonomous Driving, Robotics: Science and Systems (RSS 2025).

18. Scene Informer: Anchor-based Occlusion Inference and Trajectory Prediction in Partially Observable Environments, ICRA 2024.

17. Predicting Future Spatiotemporal Occupancy Grids with Semantics for Autonomous Driving, IV 2024.

16. Pedestrian Crossing Action Recognition and Trajectory Prediction with 3D Human Keypoints, ICRA 2023.

15. Game Theory-Based Simultaneous Prediction and Planning for Autonomous Vehicle Navigation in Crowded Environments, ITSC 2023.

14. A Cognition-Inspired Trajectory Prediction Method for Vehicles in Interactive Scenarios, IET Intelligent Transport Systems, 2023.

13. Dynamics-Aware Spatiotemporal Occupancy Prediction in Urban Environments, IROS 2022.

12. Spatio-Temporal Graph Dual-Attention Network for Multi-Agent Prediction and Tracking, IEEE Transactions on Intelligent Transportation Systems, 2022.

11. RAIN: Reinforced Hybrid Attention Inference Network for Motion Forecasting, ICCV 2021.

10. Shared Cross-Modal Trajectory Prediction for Autonomous Driving, CVPR 2021 (Oral).

9. LOKI: Long Term and Key Intentions for Trajectory Prediction, ICCV 2021.

8. Continual Multi-agent Interaction Behavior Prediction with Conditional Generative Memory, IEEE Robotics and Automation Letters (RA-L), 2021.

7. Multi-agent Driving Behavior Prediction across Different Scenarios with Self-supervised Domain Knowledge, ITSC 2021.

6. EvolveGraph: Multi-Agent Trajectory Prediction with Dynamic Relational Reasoning, NeurIPS 2020.

5. Generic Tracking and Probabilistic Prediction Framework and Its Application in Autonomous Driving, IEEE Transactions on Intelligent Transportation Systems, 2020.

4. Interaction-aware Multi-agent Tracking and Probabilistic Behavior Prediction via Adversarial Learning, ICRA 2019.

3. Conditional Generative Neural System for Probabilistic Trajectory Prediction, IROS 2019.

2. Coordination and Trajectory Prediction for Vehicle Interactions via Bayesian Generative Modeling, IV 2019.

1. Wasserstein Generative Learning with Kinematic Constraints for Probabilistic Interactive Driving Behavior Prediction, IV 2019.

Human intention and motion prediction is a vital research area that focuses on improving the safety and efficiency of interactions between humans and robots. As robots are increasingly integrated into environments shared with humans, such as homes, workplaces, and healthcare settings, it becomes crucial to predict human intentions and movements accurately. Understanding human intentions allows robots to anticipate and respond to human actions in a way that is both intuitive and safe, thereby enhancing the quality of human-robot interactions. This contributes to the development of more intelligent and adaptive robotic systems that can seamlessly collaborate with humans in various real-world scenarios. In our research, we have

1) developed multi-modal prediction methods for predicting human intentions and generating future motions (e.g., trajectories, human skeletons), which leverage fine-grained semantic and human appearance information;

2) proposed a systematic framework to identify generalizable dynamic relations (pairwise, group-wise) among human crowds;

and 3) introduced effective deep generative models to generate diverse, realistic human motions for human behavior simulation, which enhances the performance of downstream tasks.

Related Publications:

14. Trends in Motion Prediction Toward Deployable and Generalizable Autonomy: A Revisit and Perspectives, under review.

13. UniOcc: A Unified Benchmark for Occupancy Forecasting and Prediction in Autonomous Driving, IEEE International Conference on Computer Vision (ICCV 2025).

12. TrajEvo: Trajectory Prediction Heuristics Design via LLM-driven Evolution, AAAI Conference on Artificial Intelligence (AAAI 2026).

11. Multi-Agent Dynamic Relational Reasoning for Social Robot Navigation, submitted to IEEE Transactions on Robotics (T-RO), under review.

10. MATRIX: Multi-Agent Trajectory Generation with Diverse Contexts, ICRA 2024.

9. Pedestrian Crossing Action Recognition and Trajectory Prediction with 3D Human Keypoints, ICRA 2023.

8. Multi-Objective Diverse Human Motion Prediction with Knowledge Distillation, CVPR 2022 (Oral).

7. Interaction Modeling with Multiplex Attention, NeurIPS 2022.

6. Grouptron: Dynamic Multi-Scale Graph Convolutional Networks for Group-Aware Crowd Trajectory Forecasting, ICRA 2022.

5. RAIN: Reinforced Hybrid Attention Inference Network for Motion Forecasting, ICCV 2021.

4. Spectral Temporal Graph Neural Network for Trajectory Prediction, ICRA 2021.

3. LOKI: Long Term and Key Intentions for Trajectory Prediction, ICCV 2021.

2. EvolveGraph: Multi-Agent Trajectory Prediction with Dynamic Relational Reasoning, NeurIPS 2020.

1. Conditional Generative Neural System for Probabilistic Trajectory Prediction, IROS 2019.

How to generalize prediction models across different scenarios remains largely underexplored.

In contrast to recent works that use the Cartesian coordinate system and global context images directly as input, we propose to leverage human prior knowledge, including the comprehension of pairwise relations between agents and pairwise context information extracted by self-supervised learning approaches, to attain an effective Frenet-based representation.

We demonstrate that our approach achieves superior overall performance as well as superior zero-shot and few-shot transferability across different traffic scenarios with diverse layouts.
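To make the idea of a Frenet-based representation concrete, the sketch below converts a Cartesian position into Frenet coordinates (arc length `s` along a reference path and signed lateral offset `d`) by projecting the point onto a polyline. This is a generic geometric illustration, not the representation pipeline from the paper; the function name, the polyline reference path, and the sign convention (positive `d` to the left of the direction of travel) are assumptions.

```python
import math


def cartesian_to_frenet(point, path):
    """Project a 2-D point onto a polyline and return (s, d).

    s: arc length from the start of the path to the closest point on it.
    d: signed lateral distance, positive when the point lies to the left
       of the path's direction of travel (an assumed convention).
    """
    px, py = point
    best = None  # (squared distance, s, d) of the closest projection so far
    s_start = 0.0
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len = math.hypot(dx, dy)
        if seg_len == 0.0:
            continue  # skip duplicated waypoints
        # Parameter of the orthogonal projection, clamped to the segment.
        t = ((px - x0) * dx + (py - y0) * dy) / seg_len ** 2
        t = min(1.0, max(0.0, t))
        cx, cy = x0 + t * dx, y0 + t * dy
        dist_sq = (px - cx) ** 2 + (py - cy) ** 2
        if best is None or dist_sq < best[0]:
            # The 2-D cross product gives the side of the segment the point is on.
            cross = dx * (py - y0) - dy * (px - x0)
            d = math.copysign(math.sqrt(dist_sq), cross)
            best = (dist_sq, s_start + t * seg_len, d)
        s_start += seg_len
    if best is None:
        raise ValueError("path needs at least two distinct waypoints")
    return best[1], best[2]


# An L-shaped reference path: 10 m east, then 10 m north.
reference = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
print(cartesian_to_frenet((3.0, 2.0), reference))   # (3.0, 2.0)
print(cartesian_to_frenet((12.0, 5.0), reference))  # (15.0, -2.0)
```

Because `s` and `d` are expressed relative to the road geometry rather than a global frame, the same driving behavior maps to similar coordinates on differently shaped roads, which is one intuition for why such a representation can transfer across layouts.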

Related Publications:

1. Multi-Agent Driving Behavior Prediction across Different Scenarios with Self-Supervised Domain Knowledge, ITSC 2021.

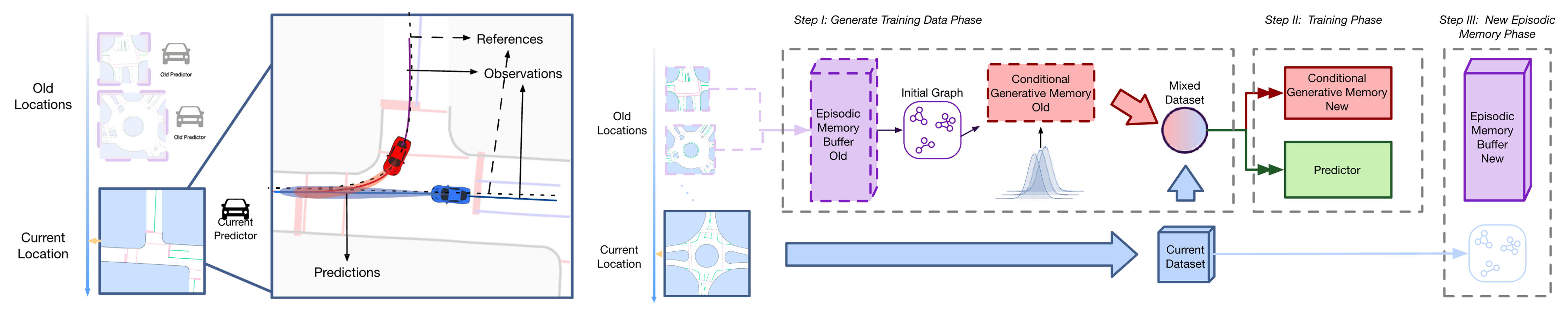

The current mainstream research focuses on how to achieve accurate prediction on one large dataset.

However, whether the multi-agent trajectory prediction model can be trained with a sequence of datasets, i.e., continual learning settings, remains a question.

Can the current prediction methods avoid catastrophic forgetting? Can we utilize the continual learning strategy in the multi-agent trajectory prediction application?

Motivated by the generative replay methods in continual learning literature, we propose a multi-agent interaction behavior prediction framework with a graph neural network-based conditional generative memory system to mitigate catastrophic forgetting.

To the best of our knowledge, this work is the first attempt to study continual learning in multi-agent interaction behavior prediction.

We empirically show that several approaches in the literature indeed suffer from catastrophic forgetting, and our approach succeeds in maintaining a low prediction error when datasets arrive sequentially.

Related Publications:

1. Continual Multi-Agent Interaction Behavior Prediction With Conditional Generative Memory, IEEE Robotics and Automation Letters, 2021.

We proposed a constrained mixture sequential Monte Carlo method that mitigates mode collapse in sequential Monte Carlo methods for tracking multiple targets and significantly improves tracking accuracy.

Since prediction is a step in state estimation, we also proposed that the prior update in the state estimation framework can be implemented with any learning-based interaction-aware prediction model.

The results in complex traffic scenarios show that using the prediction model outperforms purely physical models by a large margin due to the capability of relational reasoning.

In particular, our method performs significantly better when handling missing or noisy sensor measurements.
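The point that the prior update can be any prediction model can be sketched with a plain bootstrap particle filter whose prediction step is a pluggable function. This is a simplified 1-D illustration, not the constrained mixture sequential Monte Carlo method described above; `constant_velocity_model` is a hypothetical stand-in for a learned interaction-aware predictor, and all noise parameters are assumed values.

```python
import math
import random


def particle_filter_step(particles, measurement, predict, meas_std, rng):
    """One predict/update/resample cycle of a bootstrap particle filter (1-D state)."""
    # Prior update: propagate every particle through the supplied prediction model.
    predicted = [predict(p, rng) for p in particles]

    if measurement is None:
        # Missing measurement: nothing to correct with, keep the predicted particles.
        return predicted

    # Measurement update: Gaussian likelihood of the observation given each particle.
    weights = [math.exp(-0.5 * ((measurement - p) / meas_std) ** 2) for p in predicted]
    if sum(weights) == 0.0:
        # All likelihoods underflowed; fall back to uniform weights.
        weights = [1.0] * len(predicted)

    # Multinomial resampling in proportion to the weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))


def constant_velocity_model(position, rng, velocity=1.0, noise_std=0.2):
    """Hypothetical stand-in for a learned predictor: move one unit per step."""
    return position + velocity + rng.gauss(0.0, noise_std)


def track(measurements, num_particles=500, seed=0):
    """Run the filter over a measurement sequence (None marks a missing reading)."""
    rng = random.Random(seed)
    particles = [rng.gauss(0.0, 1.0) for _ in range(num_particles)]
    estimates = []
    for z in measurements:
        particles = particle_filter_step(
            particles, z, constant_velocity_model, 0.5, rng
        )
        estimates.append(sum(particles) / len(particles))
    return estimates
```

When a reading is missing, the filter relies entirely on the prediction step, which is why the quality of the prediction model matters most under missing or noisy measurements.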

Related Publications:

4. Spatio-Temporal Graph Dual-Attention Network for Multi-Agent Prediction and Tracking, IEEE Transactions on Intelligent Transportation Systems, 2022.

3. Generic Tracking and Probabilistic Prediction Framework and Its Application in Autonomous Driving, IEEE Transactions on Intelligent Transportation Systems, 2020.

2. Interaction-aware Multi-agent Tracking and Probabilistic Behavior Prediction via Adversarial Learning, ICRA 2019.

1. Generic Vehicle Tracking Framework Capable of Handling Occlusions Based on Modified Mixture Particle Filter, IV 2018.